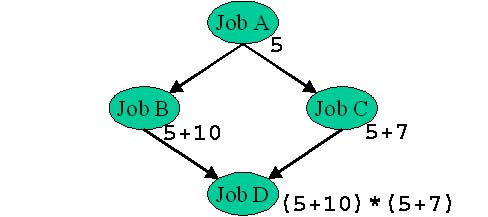

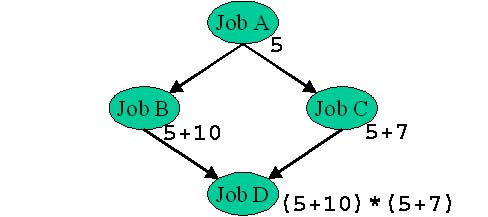

In this part we will create a more complex DAG. Our DAG will compute the value of (5+10)*(5+7)=180 in four steps, one per node: A produces the value 5, B computes A+10, C computes A+7, and D computes B*C.

Note that in this example, B and C each depend on the output from A, and D depends on the output from B and C. We will use files to store intermediate results. The result of the node "D" is the final result of our computation.

We will have four distinct executables and four submit files (one for each node in the DAG).

Let's prepare the executables. (Here we'll be using shell arithmetic, i.e. $(( )), to perform our computations. Of course we could have used Perl, C, etc.)
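As a quick check of the arithmetic, the whole computation can be sketched in a single shell session with the same $(( )) syntax. The variable names A to D simply mirror the node names, and nothing is written to files in this sketch.

```sh
#!/bin/sh
# Same arithmetic as the DAG, done in one process instead of four jobs.
A=5
B=$((A + 10))   # node B: A + 10
C=$((A + 7))    # node C: A + 7
D=$((B * C))    # node D: B * C
echo "$D"       # prints 180
```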

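For those new to Unix, this is what the lines in the scripts below do: "cat > A.sh" stores everything you type in the file A.sh until you press Ctrl-D, "#!/bin/sh" tells the system to run the script with the shell, ">" redirects a command's output into a file, and the backquotes capture a command's output so it can be assigned to a variable.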

$ cat > A.sh

#!/bin/sh

echo 5 > A.output

<Ctrl-D>

$ cat > B.sh

#!/bin/sh

A=`cat A.output` # NOTE! These are BACKWARDS apostrophes (backquotes), i.e. the key to the left of the "1" key (do not type this comment)

echo $((A+10)) > B.output

<Ctrl-D>

$ cat > C.sh

#!/bin/sh

A=`cat A.output`

echo $((A+7)) > C.output

<Ctrl-D>

$ cat > D.sh

#!/bin/sh

B=`cat B.output`

C=`cat C.output`

echo $((B*C)) > D.output

<Ctrl-D>

$ chmod +x *.sh
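Before involving Condor at all, it may be worth checking that the scripts work when run by hand in dependency order. The sketch below assumes the four scripts sit in one directory; run_dag_locally is a hypothetical helper name, not part of Condor.

```sh
#!/bin/sh
# Hypothetical helper: run the four node scripts in dependency order
# inside directory $1 and print the final result (the contents of D.output).
run_dag_locally() {
    (
        cd "$1" || exit 1
        for node in A B C D; do
            ./"$node".sh || { echo "node $node failed" >&2; exit 1; }
        done
        cat D.output
    )
}

# Only run against the current directory if all four scripts are present.
if [ -x ./A.sh ] && [ -x ./B.sh ] && [ -x ./C.sh ] && [ -x ./D.sh ]; then
    run_dag_locally .
fi
```

If the scripts are correct this should print 180. The run leaves *.output files behind, so you may want to remove them (rm *.output) before submitting the DAG, so that the results you see later really come from the remote jobs.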

Each node in a DAG will have its own Condor submit file. Let's create them.

Note that the submit files use the transfer_input_files and transfer_output_files options, which tell Condor-G to transfer the input and result files to and from the remote machine.

$ cat > A.submit

executable=A.sh

universe=globus

globusscheduler=my-gatekeeper.cs.wisc.edu:/jobmanager-fork

error=results.error

log=results.log

notification=never

transfer_output_files=A.output

WhenToTransferOutput=ALWAYS

queue

<Ctrl-D>

$ cat > B.submit

executable=B.sh

universe=globus

globusscheduler=my-gatekeeper.cs.wisc.edu:/jobmanager-fork

log=results.log

error=results.error

notification=never

transfer_input_files=A.output

transfer_output_files=B.output

WhenToTransferOutput=ALWAYS

queue

<Ctrl-D>

$ cat > C.submit

executable=C.sh

universe=globus

globusscheduler=my-gatekeeper.cs.wisc.edu:/jobmanager-fork

log=results.log

error=results.error

notification=never

transfer_input_files=A.output

transfer_output_files=C.output

WhenToTransferOutput=ALWAYS

queue

<Ctrl-D>

$ cat > D.submit

executable=D.sh

universe=globus

globusscheduler=my-gatekeeper.cs.wisc.edu:/jobmanager-fork

log=results.log

error=results.error

notification=never

transfer_input_files=B.output,C.output

transfer_output_files=D.output

WhenToTransferOutput=ALWAYS

queue

<Ctrl-D>

Now we're ready to create a DAG. Make sure you understand what the "Parent ... Child ... " clauses are saying (look at the DAG picture above).

$ cat > ABCD.dag

Job A A.submit

Job B B.submit

Job C C.submit

Job D D.submit

Parent A Child B C

Parent B C Child D

<Ctrl-D>

$ cat ABCD.dag

Job A A.submit

Job B B.submit

Job C C.submit

Job D D.submit

Parent A Child B C

Parent B C Child D

$
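One way to see what the "Parent ... Child ..." clauses imply is to expand them into individual parent/child pairs and let the standard tsort utility print an order that respects them. This is only an illustration of the dependency structure, not how DAGMan itself schedules jobs; dag_edges is a hypothetical helper, and the sketch works on a temporary copy of the DAG file created with mktemp.

```sh
#!/bin/sh
# Hypothetical helper: print one "parent child" pair per dependency
# found in the Parent ... Child ... lines of DAG file $1.
dag_edges() {
    awk '
    toupper($1) == "PARENT" {
        np = 0; seen_child = 0
        for (i = 2; i <= NF; i++) {
            if (toupper($i) == "CHILD") { seen_child = 1; continue }
            if (!seen_child) parents[++np] = $i
            else for (p = 1; p <= np; p++) print parents[p], $i
        }
    }' "$1"
}

# Temporary copy of the DAG file from this exercise.
dagfile=$(mktemp)
cat > "$dagfile" <<'EOF'
Job A A.submit
Job B B.submit
Job C C.submit
Job D D.submit
Parent A Child B C
Parent B C Child D
EOF

# tsort prints the nodes so that every parent comes before its children.
order=$(dag_edges "$dagfile" | tsort)
echo "$order"
rm -f "$dagfile"
```

A is always listed first and D last; B and C may appear in either order, which reflects the fact that they do not depend on each other and can run at the same time.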

We're ready to submit the DAG:

$ condor_submit_dag ABCD.dag

Checking your DAG input file and all submit files it references.

This might take a while...

Done.

-----------------------------------------------------------------------

File for submitting this DAG to Condor : ABCD.dag.condor.sub

Log of DAGMan debugging messages : ABCD.dag.dagman.out

Log of Condor library debug messages : ABCD.dag.lib.out

Log of the life of condor_dagman itself : ABCD.dag.dagman.log

Condor Log file for all Condor jobs of this DAG: results.log

Submitting job(s).

Logging submit event(s).

1 job(s) submitted to cluster 19.

-----------------------------------------------------------------------

condor_q -dag will organize jobs into their associated DAGs. Run it periodically to monitor the progress of the DAG. In the example below you can see the "condor_dagman" job running (state R) and jobs B and C queued under it, still idle (state I).

$ condor_q -dag

-- Submitter: uml1.cs.wisc.edu : <198.51.254.123:35604> : uml1.cs.wisc.edu

ID OWNER/NODENAME SUBMITTED RUN_TIME ST PRI SIZE CMD

19.0 user11 4/12 15:25 0+00:01:48 R 0 2.3 condor_dagman -f -

21.0 |-B 4/12 15:26 0+00:00:00 I 0 0.0 B.sh

22.0 |-C 4/12 15:26 0+00:00:00 I 0 0.0 C.sh

Again, in separate windows you may want to run "tail -f --lines=500 results.log" and "tail -f --lines=500 ABCD.dag.dagman.out" to monitor the job's progress. The output of ABCD.dag.dagman.out should look something like this:

$ tail -f ABCD.dag.dagman.out

4/12 15:25:36 ******************************************************

4/12 15:25:36 ** condor_scheduniv_exec.19.0 (CONDOR_DAGMAN) STARTING UP

4/12 15:25:36 ** $CondorVersion: 6.6.1 Feb 5 2004 $

4/12 15:25:36 ** $CondorPlatform: I386-LINUX-RH72 $

4/12 15:25:36 ** PID = 8579

4/12 15:25:36 ******************************************************

4/12 15:25:36 Using config file: /home/user11/condor_local/condor_config

4/12 15:25:36 Using local config files: /home/user11/condor_local/condor_config.local

4/12 15:25:36 DaemonCore: Command Socket at <198.51.254.123:55191>

4/12 15:25:36 DAGMAN_SUBMIT_DELAY = 0 ("UNDEFINED")

4/12 15:25:36 argv[0] == "condor_scheduniv_exec.19.0"

4/12 15:25:36 argv[1] == "-Debug"

4/12 15:25:36 argv[2] == "3"

4/12 15:25:36 argv[3] == "-Lockfile"

4/12 15:25:36 argv[4] == "ABCD.dag.lock"

4/12 15:25:36 argv[5] == "-Dag"

4/12 15:25:36 argv[6] == "ABCD.dag"

4/12 15:25:36 argv[7] == "-Rescue"

4/12 15:25:36 argv[8] == "ABCD.dag.rescue"

4/12 15:25:36 argv[9] == "-Condorlog"

4/12 15:25:36 argv[10] == "results.log"

4/12 15:25:36 DAG Lockfile will be written to ABCD.dag.lock

4/12 15:25:36 DAG Input file is ABCD.dag

4/12 15:25:36 Rescue DAG will be written to ABCD.dag.rescue

4/12 15:25:36 Condor log will be written to results.log, etc.

4/12 15:25:36 Parsing ABCD.dag ...

4/12 15:25:36 Dag contains 4 total jobs

4/12 15:25:36 Deleting any older versions of log files...

4/12 15:25:36 Bootstrapping...

4/12 15:25:36 Number of pre-completed jobs: 0

4/12 15:25:36 Registering condor_event_timer...

4/12 15:25:37 Submitting Condor Job A ...

4/12 15:25:37 submitting: condor_submit -a 'dag_node_name = A' -a '+DAGManJobID = 19.0' -a 'submit_event_notes = DAG Node: $(dag_node_name)' A.submit 2>&1

4/12 15:25:37 assigned Condor ID (20.0.0)

4/12 15:25:37 Event: ULOG_SUBMIT for Condor Job A (20.0.0)

4/12 15:25:37 Of 4 nodes total:

4/12 15:25:37 Done Pre Queued Post Ready Un-Ready Failed

4/12 15:25:37 === === === === === === ===

4/12 15:25:37 0 0 1 0 0 3 0

4/12 15:25:57 condor_read(): timeout reading buffer.

4/12 15:26:32 Event: ULOG_GLOBUS_SUBMIT for Condor Job A (20.0.0)

4/12 15:26:32 Event: ULOG_EXECUTE for Condor Job A (20.0.0)

4/12 15:26:37 Event: ULOG_JOB_TERMINATED for Condor Job A (20.0.0)

4/12 15:26:37 Job A completed successfully.

4/12 15:26:37 Of 4 nodes total:

4/12 15:26:37 Done Pre Queued Post Ready Un-Ready Failed

4/12 15:26:37 === === === === === === ===

4/12 15:26:37 1 0 0 0 2 1 0

4/12 15:26:43 Submitting Condor Job B ...

4/12 15:26:43 submitting: condor_submit -a 'dag_node_name = B' -a '+DAGManJobID = 19.0' -a 'submit_event_notes = DAG Node: $(dag_node_name)' B.submit 2>&1

4/12 15:26:43 assigned Condor ID (21.0.0)

4/12 15:26:44 Submitting Condor Job C ...

4/12 15:26:44 submitting: condor_submit -a 'dag_node_name = C' -a '+DAGManJobID = 19.0' -a 'submit_event_notes = DAG Node: $(dag_node_name)' C.submit 2>&1

4/12 15:27:24 assigned Condor ID (22.0.0)

4/12 15:27:24 Event: ULOG_SUBMIT for Condor Job B (21.0.0)

4/12 15:27:24 Event: ULOG_SUBMIT for Condor Job C (22.0.0)

4/12 15:27:24 Of 4 nodes total:

4/12 15:27:24 Done Pre Queued Post Ready Un-Ready Failed

4/12 15:27:24 === === === === === === ===

4/12 15:27:24 1 0 2 0 0 1 0

4/12 15:27:59 Event: ULOG_GLOBUS_SUBMIT for Condor Job C (22.0.0)

4/12 15:27:59 Event: ULOG_EXECUTE for Condor Job C (22.0.0)

4/12 15:27:59 Event: ULOG_GLOBUS_SUBMIT for Condor Job B (21.0.0)

4/12 15:27:59 Event: ULOG_EXECUTE for Condor Job B (21.0.0)

4/12 15:28:04 Event: ULOG_JOB_TERMINATED for Condor Job C (22.0.0)

4/12 15:28:04 Job C completed successfully.

4/12 15:28:04 Of 4 nodes total:

...

Watching the logs or the condor_q output, you'll note that the final node ("D") wasn't run until both "B" and "C" had finished.
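If you would rather not read the whole log, the nodes that have finished can be pulled out of ABCD.dag.dagman.out with a short filter. The sketch below assumes the "Job X completed successfully." message format shown in the log excerpt above, which may differ in other Condor versions; completed_nodes is a hypothetical helper name.

```sh
#!/bin/sh
# Hypothetical helper: list the DAG nodes that the dagman.out file $1
# reports as completed, one node name per line, in completion order.
completed_nodes() {
    sed -n 's/^.* Job \(.*\) completed successfully\.$/\1/p' "$1"
}

# Only inspect the log if it exists in the current directory.
if [ -f ABCD.dag.dagman.out ]; then
    completed_nodes ABCD.dag.dagman.out
fi
```

Node D should appear in this list only after both B and C do.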

Examine your results. There are many files here. They can be helpful for debugging:

$ ls

A.output A.sh A.submit ABCD.dag ABCD.dag.condor.sub ABCD.dag.dagman.log ABCD.dag.dagman.out ABCD.dag.lib.out B.output B.sh B.submit C.output C.sh C.submit D.output D.sh D.submit results.error results.log

Provided that everything ran successfully, and we have no errors in our logs (mainly ABCD.dag.dagman.out), the result of the final computation is the output of node D. Therefore it should be in D.output.

$ cat D.output

180

It worked!!!! Behold the power of Grid computing!

If you got a different number as output, you might have specified the filenames in your submit files incorrectly, which would cause one of the scripts to read the wrong intermediate value (or none at all).
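A simple way to look for such a filename mistake is to check that every file named in a transfer_input_files line actually exists in the submit directory. The sketch below is meant to be run after the DAG has finished and before cleaning up, when the intermediate *.output files have been transferred back; check_inputs is a hypothetical helper, and it assumes the option is written in lowercase at the start of a line, as in the submit files above.

```sh
#!/bin/sh
# Hypothetical helper: report every file listed in the
# transfer_input_files line of submit file $1 that does not exist
# in the current directory.
check_inputs() {
    inputs=$(sed -n 's/^transfer_input_files[ ]*=[ ]*//p' "$1" | tr ',' ' ')
    for f in $inputs; do
        if [ ! -f "$f" ]; then
            echo "$1: input file $f not found"
        fi
    done
}

# Check every submit file in the current directory, if there are any.
for s in *.submit; do
    [ -f "$s" ] || continue
    check_inputs "$s"
done
```

No output means every listed input file was found. A misspelled name, for example "B.ouput" in D.submit, would show up as a "not found" line.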

Clean up your results.

$ rm ABCD.dag.*

$ rm results.*

$ rm *.output