Description of Program: The Live Action Monster (LAM) is a series of C++ and Python programs designed primarily to add live action elements to an animation. At its core, LAM composites one image on top of another, so it can also be used for purposes other than live action. One such use is to render a scene as several separate images and then composite the rendered frames together. This is extremely useful when the scene is very visually complex.

Method for Capturing Live Action Data: Live actors were individually videotaped on Digital Video (DV) in front of a "blue screen" (a blue fleece blanket). The blanket was attached to a bulletin board in one of the rooms in the Computer Science Building. An example of the original recording is shown during the credits of the animation. Examples of the final segmentation are below. The original AVI file is on the hard disk that is going into the new graphics machine (I forgot to grab a copy of it before the hard drive was taken).

Method for Segmenting Live Action Data: Since the video was recorded in front of a known blue screen, it was possible to use some color information in the segmentation process; however, color alone was not enough. The general strategy for segmentation was:

1. Each pixel was subtracted from the corresponding pixel in an image known to contain only the blue screen (no actor).

2. Each pixel was tested against the condition BLUE < k*(RED+GREEN), where k is some constant, i.e. whether there is less blue in the pixel than red and green.

If a pixel both differs from the blue-screen-only image (1) and has less blue than red and green (2), then that pixel was marked as actor; all other pixels were treated as blue screen. This method gives a reliable means of separating the actor from the blue screen. Finally, the segmented image is decimated so that the actor will fit in the rendered frame of the animation.
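The two tests above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not LAM's actual code: the function names, the sum-of-absolute-differences measure, and the values of `k` and `diff_threshold` are all hypothetical, and the text does not say how decimation was performed, so plain subsampling is assumed.

```python
def segment_actor(frame, background, k=0.5, diff_threshold=30):
    """Return a mask (rows of bools) that is True where a pixel is marked as actor.

    frame, background: same-sized lists of rows of (r, g, b) tuples in 0..255.
    k and diff_threshold are hypothetical tuning values, not taken from LAM.
    """
    mask = []
    for frame_row, background_row in zip(frame, background):
        mask_row = []
        for (r, g, b), (back_r, back_g, back_b) in zip(frame_row, background_row):
            # Test 1: the pixel differs from the actor-free blue screen image.
            # (Sum of absolute channel differences is an assumed measure.)
            difference = abs(r - back_r) + abs(g - back_g) + abs(b - back_b)
            differs = difference > diff_threshold
            # Test 2: the pixel has less blue than k * (red + green).
            less_blue = b < k * (r + g)
            # A pixel is actor only if both tests pass.
            mask_row.append(differs and less_blue)
        mask.append(mask_row)
    return mask


def decimate(image, factor):
    """Shrink an image by keeping every factor-th pixel in each direction."""
    return [row[::factor] for row in image[::factor]]
```

Requiring both tests is what handles the awkward cases: a shadow on the blanket differs from the reference image but is still mostly blue, so it fails the second test and stays background.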

Compositing Live Action: The segmented image is assigned 3 values within the rendered animation frame: x-offset, y-offset, and depth. These values can be set by hand, or by "sliding" the segmented image around in the rendered frame. Once the values are determined, each frame of live action is composited into the rendered animation frames.
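A rough Python sketch of this placement step is shown below. It assumes the rendered frame comes with a per-pixel depth buffer, that smaller depth values are nearer the camera, and that the actor sits at a single constant depth; the function name and data layout are hypothetical and are not taken from LAM.

```python
def composite_actor(frame, frame_depth, actor, mask,
                    x_offset, y_offset, actor_depth):
    """Paste the segmented actor into a copy of the rendered frame.

    frame:       rows of pixels for the rendered animation frame.
    frame_depth: rows of per-pixel depths (assumed: smaller = nearer).
    actor, mask: segmented live action image and its actor mask.
    """
    out = [list(row) for row in frame]  # leave the input frame untouched
    height = len(frame)
    width = len(frame[0]) if height else 0
    for actor_y, (actor_row, mask_row) in enumerate(zip(actor, mask)):
        frame_y = actor_y + y_offset
        if not 0 <= frame_y < height:
            continue  # this actor row falls outside the frame
        for actor_x, (pixel, is_actor) in enumerate(zip(actor_row, mask_row)):
            frame_x = actor_x + x_offset
            if not 0 <= frame_x < width:
                continue  # this actor pixel falls outside the frame
            # Draw only actor pixels that are nearer than the rendered scene.
            if is_actor and actor_depth < frame_depth[frame_y][frame_x]:
                out[frame_y][frame_x] = pixel
    return out
```

Because the test is made per pixel against the scene depth, rendered objects nearer than `actor_depth` naturally cover the actor, while objects farther away are covered by the actor.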

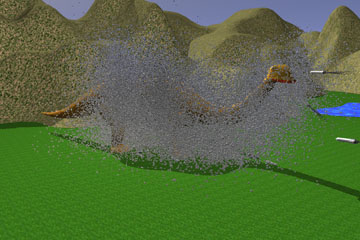

Compositing Several Rendered Frames: Often it is convenient to render a scene in several passes, e.g. to render particle systems separately from the rest of the scene. We did this for blood, and the Live Action Monster was provided to another group in order to composite dust particles into the final animation. Each scene is rendered two or more times, with different objects having visibility turned on for different renders. The depth information in the frame is then used to composite two frames together; the process can be repeated if more than two frames need to be composited.
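The pairwise depth merge, and its repetition over more than two passes, might look like the following Python sketch. The names and data layout are assumptions for illustration: each pass is a `(color, depth)` pair of same-sized grids, smaller depth means nearer the camera, and pixels where a pass drew nothing hold `float("inf")`. It performs a hard per-pixel depth test only; any blending of semi-transparent particles is not modeled here.

```python
from functools import reduce


def composite_by_depth(pass_a, pass_b):
    """Merge two rendered passes, keeping the nearer pixel at each position."""
    color_a, depth_a = pass_a
    color_b, depth_b = pass_b
    out_color, out_depth = [], []
    for ca_row, da_row, cb_row, db_row in zip(color_a, depth_a, color_b, depth_b):
        color_row, depth_row = [], []
        for ca, da, cb, db in zip(ca_row, da_row, cb_row, db_row):
            if db < da:
                # Pass B is nearer here, so its pixel wins.
                color_row.append(cb)
                depth_row.append(db)
            else:
                # Pass A is nearer (or tied), so its pixel is kept.
                color_row.append(ca)
                depth_row.append(da)
        out_color.append(color_row)
        out_depth.append(depth_row)
    # Returning the merged depth lets the result be merged with further passes.
    return out_color, out_depth


def composite_passes(passes):
    """Fold any number of passes into one frame by repeated pairwise merging."""
    return reduce(composite_by_depth, passes)
```

Since each merge returns a depth grid along with the color grid, the output of one merge can be fed straight into the next, which is what makes the repeated two-frame process work for any number of passes.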

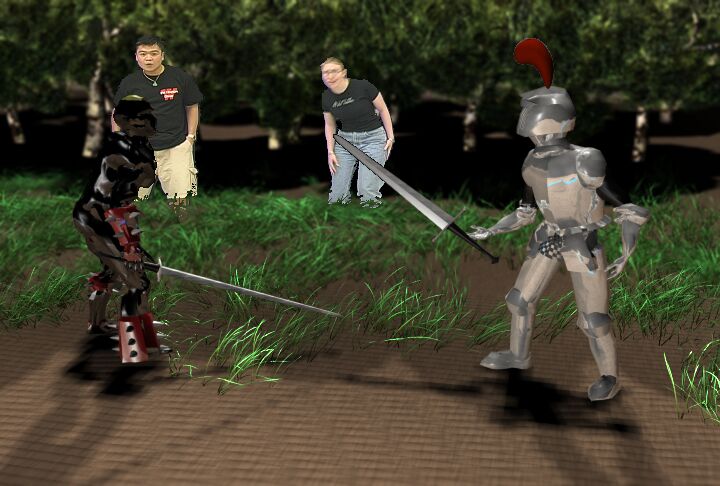

Live actors Nguyen Tran (left) and Allison McCarthy (right), after having the background removed.

The live actors have been composited into the scene at a depth between the grass and the trees. When a cartoon actor goes in front of them, the live actors will be occluded.

The blood in this scene was rendered separately, and then composited, along with the live actors, to form the final frame.

A dust cloud composited over Gertie the Dinosaur, from "Gertie, Interrupted." This image consists of 11 separate particle systems. The particle systems were rendered separately and then composited together into one final image. This works around the problem of rendering machines running out of memory.