Insight: Capturing mobile experience in Internet-scale application deployments

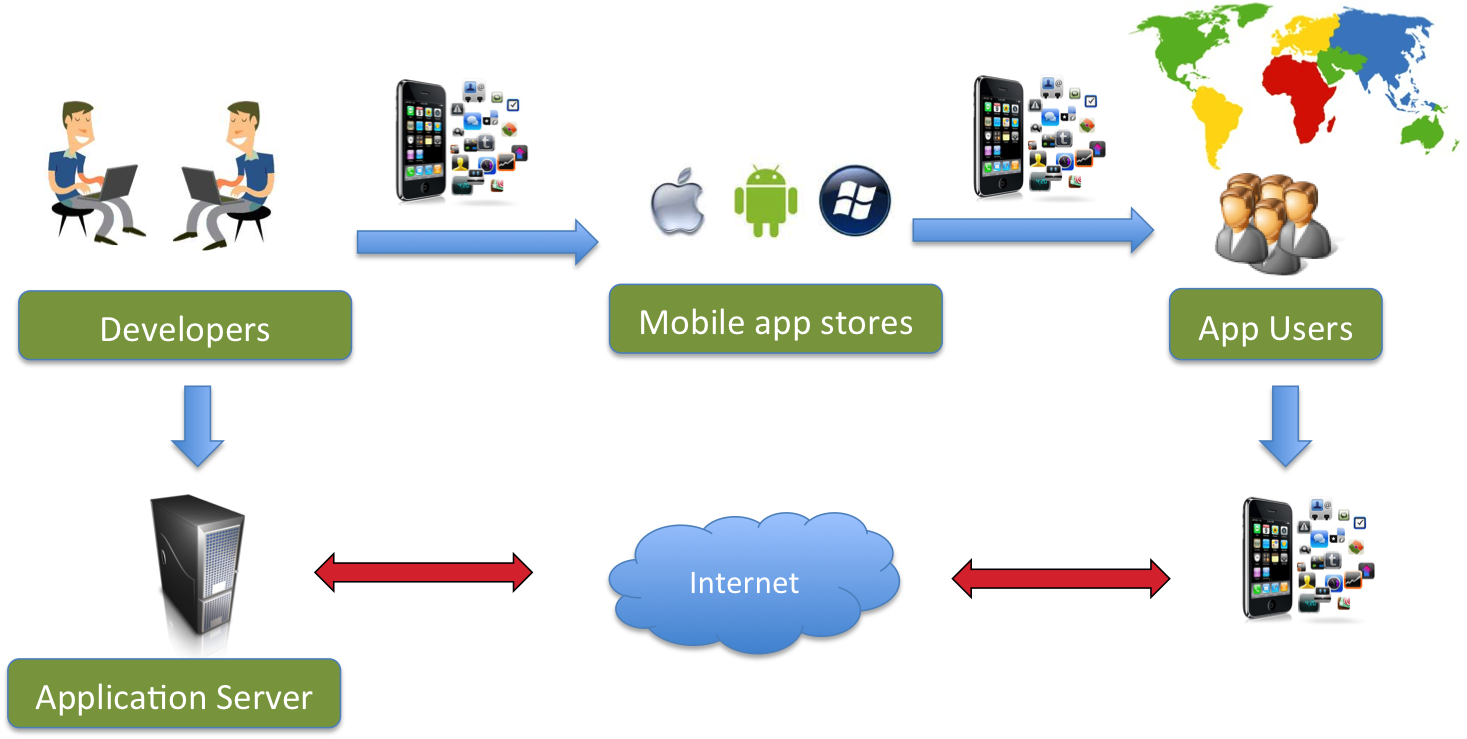

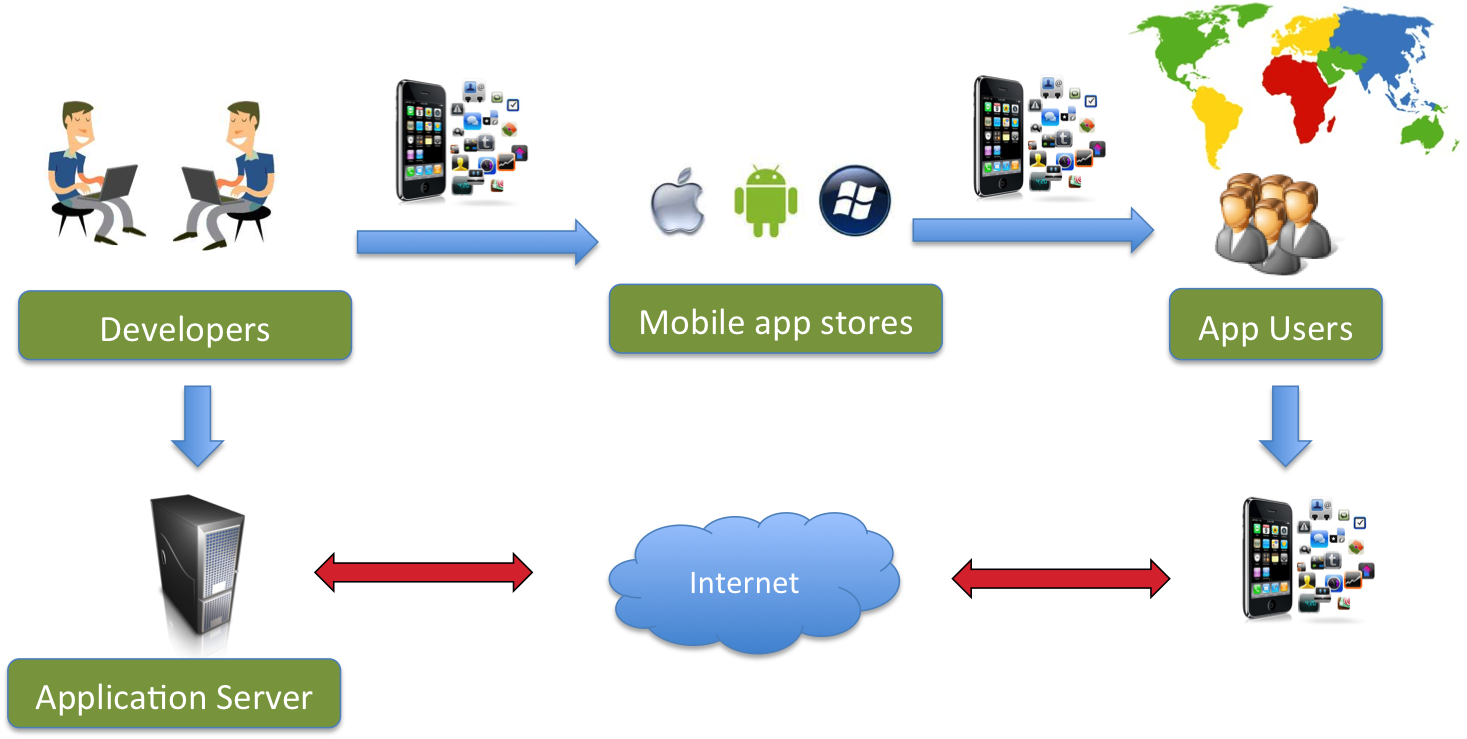

Mobile applications are the talk of the town, with hundreds of thousands of applications populating different app stores and billions of downloads of these applications. Prior to releasing an application, developers may have some preconceived notion of how their application is likely to be used, what aspects of the application may increase its popularity, and what might even help them generate more revenue. Once released, these applications potentially get deployed across a large range of devices, users, and operating environments, and may cater to different social as well as cultural usage patterns. The success and adoption of an application can be affected by a large variety of factors, e.g., the network latency and bandwidth experienced, the responsiveness of the application to user requests, or even how accessible certain UI components are on the various display screens.

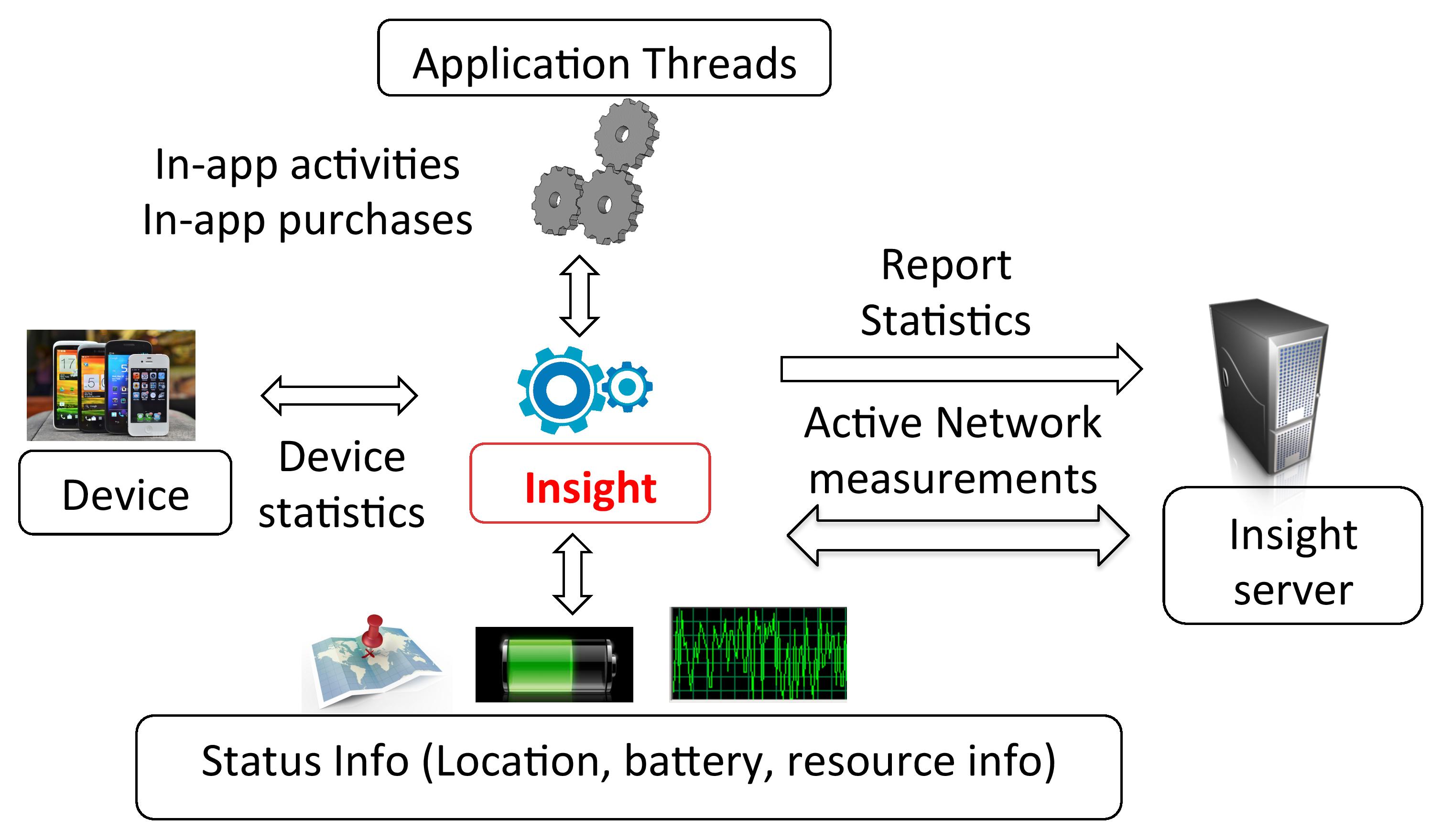

In order to capture the application experience of users, we have developed a toolkit library, called Insight, and have invited various developers to install this library into their applications. Our approach to analyzing apps is quite similar to that of a few commercial analytics solutions (e.g., Flurry, Localytics, KISSmetrics) that focus on providing business analytics (e.g., number of downloads, geographical locations of users). Insight goes further than some of the commercial toolkits by correlating network-layer and device-related factors that impact the application usage experience of users. For example, Insight performs light-weight network latency measurements during application sessions. Thus, by tracking in-app purchases made by users and the network quality statistics of the sessions in which these purchases were made, developers can infer how revenue may be affected by quality across different networks. In this first-of-its-kind academic study, Insight has served as a distributed lens from inside applications with more than one million users over more than three years.
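As an illustration of the kind of correlation described above, the following minimal sketch groups sessions by their measured latency and compares the average in-app revenue per session in each group. The record fields (`rtt_ms`, `revenue`), the bucket edges, and the sample values are all assumptions made for this example; they are not Insight's actual data format or results from the study.

```python
from collections import defaultdict
from statistics import mean


def bucket_label(rtt_ms, edges):
    """Return a label such as '0-100 ms' for the RTT bucket containing rtt_ms."""
    lower = 0
    for upper in edges:
        if rtt_ms < upper:
            return f"{lower}-{upper} ms"
        lower = upper
    return f">={lower} ms"


def revenue_by_rtt_bucket(sessions, edges=(100, 300)):
    """Mean in-app purchase revenue per session, grouped by session RTT bucket."""
    grouped = defaultdict(list)
    for s in sessions:
        grouped[bucket_label(s["rtt_ms"], edges)].append(s["revenue"])
    return {label: mean(values) for label, values in grouped.items()}


# Made-up sessions for illustration only (hypothetical fields and values).
sessions = [
    {"rtt_ms": 45.0, "revenue": 2.0},
    {"rtt_ms": 80.0, "revenue": 0.0},
    {"rtt_ms": 150.0, "revenue": 1.0},
    {"rtt_ms": 450.0, "revenue": 0.0},
    {"rtt_ms": 600.0, "revenue": 0.0},
]

if __name__ == "__main__":
    for label, avg in revenue_by_rtt_bucket(sessions).items():
        print(f"{label}: {avg:.2f} per session")
```

A comparison like this only shows association; differences between buckets may also reflect device type, region, or time of day, so it is best read alongside the other per-session statistics.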


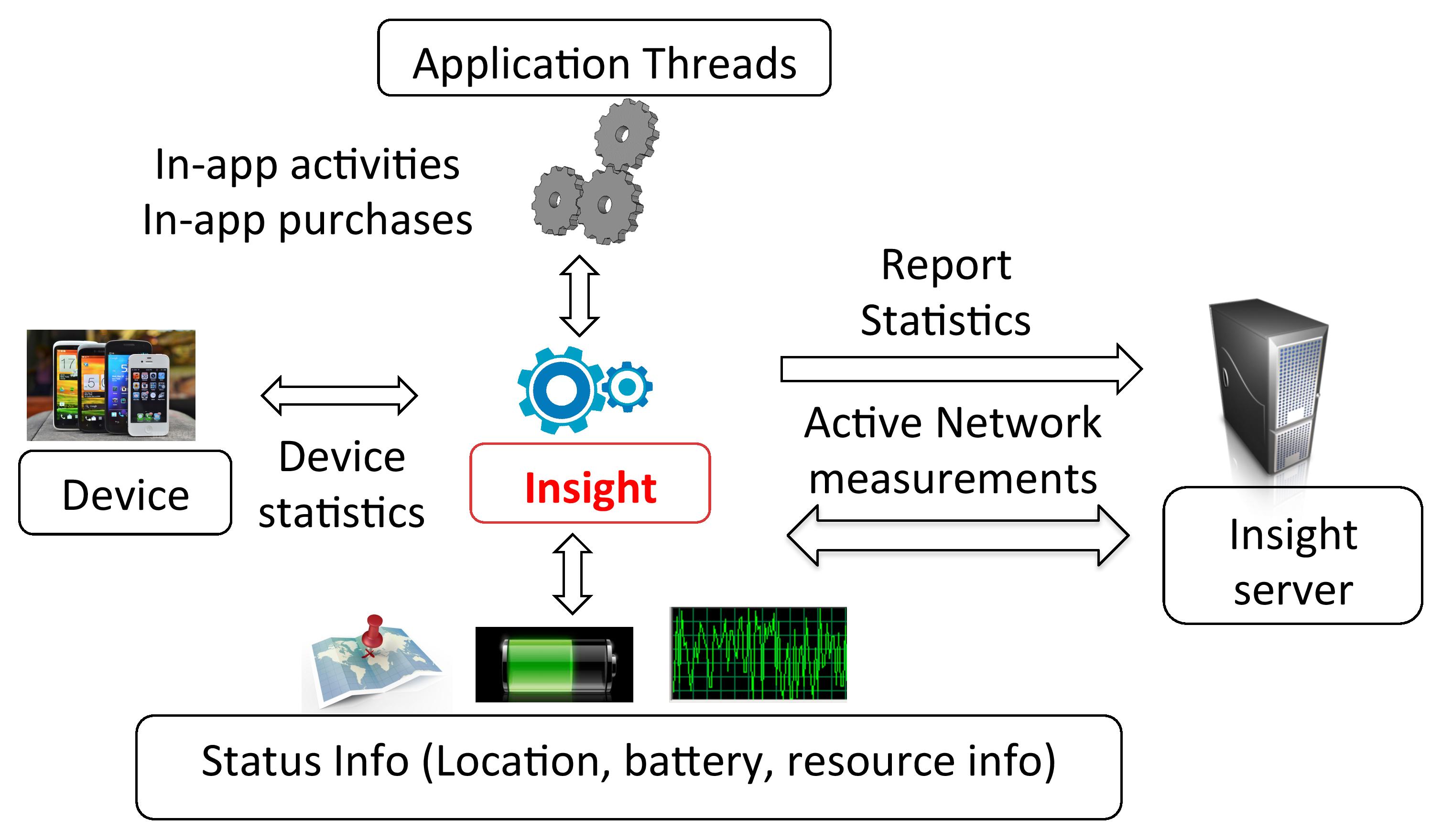

Figure 1: A typical mobile application deployment and usage scenario.


Insight Framework Details

The statistics collected by Insight can be grouped into the following categories. Developers have the flexibility to selectively enable or disable any of the following measurements, so it is up to them to determine the set of metrics they wish to capture.

- Session Data: The duration of an application's usage in the foreground is denoted as a session. A session ends if the application is closed or pushed to the background.

- Device Information: Insight also records device-related information (e.g., model, platform, screen brightness, available network interfaces, signal strengths) per session.

- Network Data: Insight collects periodic network performance data by performing light-weight measurements using both TCP and UDP. When a user starts the application, Insight initiates a TCP connection with our measurement server (co-located with the actual application servers). The server sends probes to the application at a configurable interval using TCP and UDP packets with increasing sequence numbers. These probes elicit immediate responses from the application, allowing computation of both UDP- and TCP-based network latencies, or Round Trip Times (RTTs). These RTTs allow us to measure the quality of the network link (e.g., cellular or WiFi) between the server and the user device. A high network latency (RTT) indicates a poor network link. We chose a 30-second interval as it provides reasonable measurement accuracy while minimally affecting a mobile phone's resources.

- Location Data: While the app has access to precise user location through GPS/WiFi, Insight has the option to collect only coarse-grained location information (100 sq. km. regions, state level or country level).

- Resource Consumption Statistics: Insight also measures the resource overhead of the application on user devices by capturing a set of metrics. These metrics include the CPU and memory utilization of the application as well as the "battery drain rate" on the device while the application is in the foreground. The battery drain rate is calculated per session by dividing the change in battery level during the session by the session's duration. For example, if the battery level changes from 80% to 75% during a session of 20 minutes, the battery drain rate is (80 - 75) / 20 levels/minute, i.e., 0.25 levels/minute. The overhead of this measurement is minimal on the device as well as the application, as it is passive and performed infrequently (every 30 seconds by default).

- Application-specific Data: In addition to the above generic data, Insight also provides an API for applications to log any additional data. The application code calls this API periodically to record app-specific statistics. This data is later combined with the other statistics (e.g., device, network performance) collected by Insight to study their correlation with in-application activity.
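Two of the per-session quantities above reduce to simple arithmetic, sketched here under stated assumptions: RTTs are obtained by matching each probe to its response by sequence number, and the battery drain rate is the drop in battery level divided by the session duration. The function names and the timestamp dictionaries are hypothetical and chosen for this example; they are not taken from the Insight code base.

```python
def probe_rtts(sent, received):
    """Match probes to responses by sequence number and return RTTs in ms.

    `sent` and `received` map a probe's sequence number to a timestamp in
    seconds, both taken on the same (server) clock. Probes with no response,
    e.g. lost UDP packets, are skipped.
    """
    return {
        seq: (received[seq] - t_sent) * 1000.0
        for seq, t_sent in sent.items()
        if seq in received
    }


def battery_drain_rate(start_level, end_level, duration_min):
    """Battery drain in levels per minute over one foreground session."""
    if duration_min <= 0:
        raise ValueError("session duration must be positive")
    return (start_level - end_level) / duration_min


if __name__ == "__main__":
    # Probes sent every 30 seconds; probe 2 received no response.
    sent = {1: 0.0, 2: 30.0, 3: 60.0}
    received = {1: 0.0625, 3: 60.125}
    print(probe_rtts(sent, received))

    # The example from the text: 80% to 75% over a 20-minute session.
    print(battery_drain_rate(80, 75, 20))
```

Taking both timestamps on the server avoids any need for clock synchronization with the device, which is one reason a server-initiated probe is convenient for this kind of measurement.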


Figure 2: Statistics collected by Insight during an application’s usage through passive and active measurements.


Insight Code + Data + Tools

Set up your own mobile application analytics framework!

The Insight toolkit code from our CoNEXT 2013 paper is now available on GitHub: Android client code and Insight server code

Click here

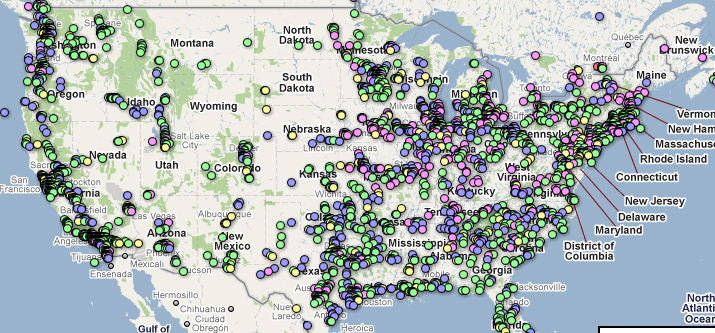

to view a snapshot of cellular network performance results.

Also check out the NetworkTest iPhone and Android applications to compare your network performance in your region:


Publications

People
