Motion Graphs

Download the paper (PDF)

Summary

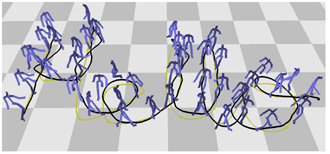

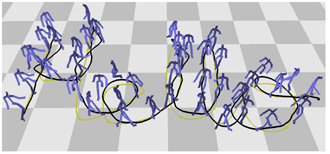

This work takes a step toward the goal of directable motion capture. Given a database of motion capture data, we automatically add seamless transitions to form a directed graph that we call a motion graph. Every edge of this graph is a clip of motion, and nodes serve as choice points connecting clips. Any walk on the graph, that is, any sequence of connected nodes, is itself a valid motion. A user may exert control over the database by extracting walks that meet a set of constraints.
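The structure described above can be sketched in a few lines of Python. This is only an illustrative data layout, not the implementation from the paper: the class names `Clip` and `MotionGraph`, their fields, and the helper methods are all assumptions made for this example. Because the motion lives on the edges, the sketch stores a walk as the list of clips along it.

```python
from dataclasses import dataclass, field


@dataclass
class Clip:
    """An edge of the graph: one piece of motion (hypothetical fields)."""
    name: str
    src: int         # node where the clip starts
    dst: int         # node where the clip ends
    duration: float  # length of the clip in seconds


@dataclass
class MotionGraph:
    """Directed graph whose nodes are choice points and whose edges are clips."""
    out_edges: dict = field(default_factory=dict)  # node id -> list of Clip

    def add_clip(self, clip):
        # Register the clip under its start node and make sure the end node exists.
        self.out_edges.setdefault(clip.src, []).append(clip)
        self.out_edges.setdefault(clip.dst, [])

    def is_walk(self, clips):
        """True if every clip starts at the node where the previous clip ends."""
        return all(a.dst == b.src for a, b in zip(clips, clips[1:]))

    def walk_duration(self, clips):
        """Total playing time of a walk, in seconds."""
        if not self.is_walk(clips):
            raise ValueError("clips do not form a connected walk")
        return sum(c.duration for c in clips)
```

In this layout, extracting a motion amounts to choosing a list of clips for which `is_walk` holds and playing them back to back.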

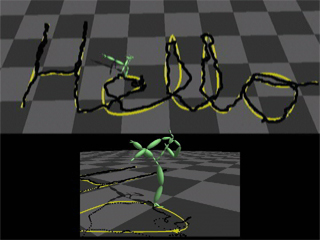

Path Fitting

In this work we focus on the problem of directing locomotion down user-specified paths. That is, the user draws a curve on the ground and we extract from the motion graph a motion that travels along this path.
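To make the idea of fitting a walk to a path concrete, here is a deliberately simplified sketch. It is not the search used in the paper: it uses a greedy one-step lookahead, it reduces each clip to a travelled distance and a turn angle, and it scores a clip by the squared distance between the character and the path point at the same arc length. The toy graph, the two example paths, and the function names are all assumptions made for illustration.

```python
import math

# Hypothetical toy graph: node -> list of (clip name, next node, distance, turn in radians).
TOY_GRAPH = {
    0: [
        ("straight", 0, 1.0, 0.0),
        ("left", 0, 1.0, math.pi / 8),
        ("right", 0, 1.0, -math.pi / 8),
    ],
}


def straight_path(s):
    """Point at arc length s on a straight line along the x axis."""
    return (s, 0.0)


def circle_path(s, radius=5.0):
    """Point at arc length s on a left-turning circle that starts at the origin."""
    return (radius * math.sin(s / radius), radius * (1.0 - math.cos(s / radius)))


def greedy_fit(graph, start_node, target, total_length):
    """Greedily pick, at each node, the clip that ends closest to the target path.

    Returns (clip names along the walk, accumulated squared error).
    Assumes every node has at least one outgoing clip with positive distance.
    """
    node, x, y, heading = start_node, 0.0, 0.0, 0.0
    travelled, error, walk = 0.0, 0.0, []
    while travelled < total_length:
        best = None
        for name, next_node, dist, turn in graph[node]:
            h = heading + turn
            nx, ny = x + dist * math.cos(h), y + dist * math.sin(h)
            # Compare against the path point at the same travelled arc length.
            tx, ty = target(travelled + dist)
            err = (nx - tx) ** 2 + (ny - ty) ** 2
            if best is None or err < best[0]:
                best = (err, name, next_node, nx, ny, h, dist)
        err, name, node, x, y, heading, dist = best
        travelled += dist
        error += err
        walk.append(name)
    return walk, error
```

Calling `greedy_fit(TOY_GRAPH, 0, circle_path, 10.0)` returns a list of clip names and the accumulated error. A greedy choice can paint itself into a corner on a real motion graph, so a practical search would look further ahead than one clip.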

The following video shows some sample results:

(AVI, 37 MB, 1:46)

- First we show a simple example from a motion graph built out of a single 12-second "sneaking" motion, along with the reflection of that motion. Even with such a small dataset, we can create nontrivial new motions.

- Second is an example from a larger walking dataset. The generated walking motion is about 54 seconds long and took about 57 seconds to compute. In general, in our experiments it took about as long to compute a motion as it took to play it.

- Next we fit to the same path, but this time using a dataset of martial arts motions. Note that our methods work for motions that are not obviously locomotion.

- Finally, we show fits to another complicated path.

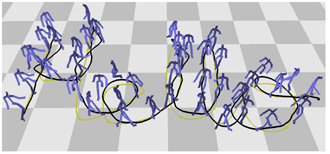

Path Fitting with Multiple Styles

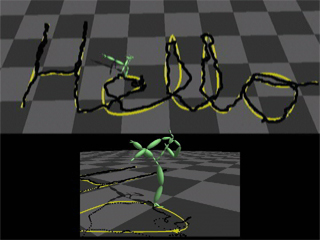

The previous video showed examples where the character was performing only one kind of action while traveling down the path ("walking", "sneaking", etc.). However, we can also require the character to do different things on different portions of the path. The following video shows some results:

(AVI, 23 MB, 1:47)

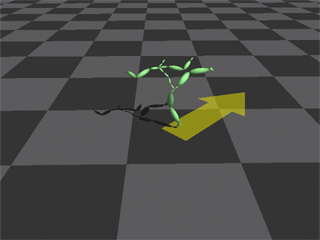

- We start with a simple example. The character is to walk on the yellow portions of the path, sneak on the green portions, and perform kicks on the purple portions.

- Next is a second example from the same walk-sneak-fight dataset. There are a total of six possible style-to-style transitions (e.g., walk-to-fight), and this motion shows an example of each.

- Finally, we show a fit to a more complicated path.
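The style requirements in the examples above can be pictured as labels attached to portions of the path. The sketch below is an assumption about how such an annotation might look, not the representation used in the paper: the segment boundaries, the `(name, style)` clip tuples, and the function names are hypothetical. It also checks the count of six transitions, which is the number of ordered pairs of distinct styles among three.

```python
from itertools import permutations

STYLES = ["walk", "sneak", "fight"]

# Ordered pairs of distinct styles, e.g. ("walk", "fight") for walk-to-fight.
TRANSITIONS = list(permutations(STYLES, 2))

# Hypothetical path annotation: (arc length where the portion ends, required style).
SEGMENTS = [(4.0, "walk"), (7.0, "sneak"), (9.0, "fight")]


def required_style(s, segments=SEGMENTS):
    """Style required at arc length s; the last portion extends to the end of the path."""
    for end, style in segments:
        if s < end:
            return style
    return segments[-1][1]


def allowed(clips, style):
    """Keep only the (name, style) clips whose label matches the required style."""
    return [clip for clip in clips if clip[1] == style]
```

A search over the graph would then consider only `allowed(...)` clips at each step. Clips that straddle a boundary between two portions would need a looser rule than this exact-match filter.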

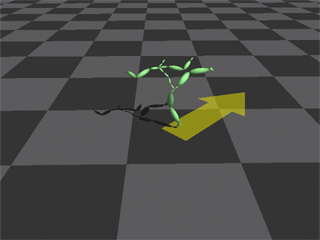

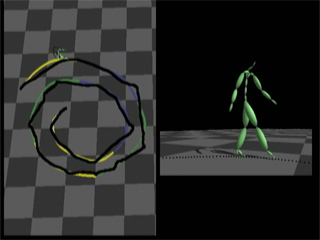

Interactive Control

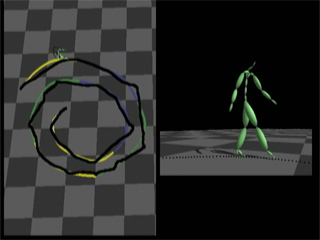

We can use our technique to control a character interactively. For each node in the motion graph, we precompute fits to paths in a sampling of directions and store the first edge of each resulting graph walk. In effect, we then have a lookup table that tells us which edge to take next given the direction in which we want to travel. The following video shows some results; it was recorded interactively. Note that there is a delay between when the desired direction changes and when the character itself changes direction. This is because it generally takes some time for the character to reach a point in the motion graph from which it can perform the desired action.
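The lookup table described above can be sketched as a dictionary keyed by node and sampled direction. This is a minimal illustration under stated assumptions: the function names are hypothetical, directions are sampled uniformly around the circle, and the path fit itself is passed in as a callable (`first_edge_of_fit`) rather than implemented here.

```python
import math


def sample_directions(n):
    """n evenly spaced directions around the circle, in radians."""
    return [2 * math.pi * k / n for k in range(n)]


def build_table(nodes, directions, first_edge_of_fit):
    """Precompute, for each (node, direction index), the first edge of the fitted walk.

    first_edge_of_fit(node, direction) is a stand-in for a full path fit.
    """
    return {
        (node, k): first_edge_of_fit(node, d)
        for node in nodes
        for k, d in enumerate(directions)
    }


def nearest_direction_index(angle, n):
    """Index of the sampled direction closest to angle (any real number of radians)."""
    step = 2 * math.pi / n
    return round((angle % (2 * math.pi)) / step) % n


def next_edge(table, node, desired_angle, n):
    """Runtime lookup: which edge to take from node to head toward desired_angle."""
    return table[(node, nearest_direction_index(desired_angle, n))]
```

At run time only `next_edge` is called, once per node reached, so the cost of the path fits is paid entirely in the precomputation.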

The following video shows the technique applied to two datasets, one with walking motions and one with kicking motions.

(AVI, 9.9 MB, 0:37)