Abstract: This project generates arbitrarily sized scenes based on the locations of objects in a sample of the scene. For example, an arbitrarily sized forest can be grown from a large enough fixed sample. We use a simple overlapping window technique with a Hausdorff metric to grow such scenes.

With the wealth of data available, we should be able to exploit what we know in order to generate pseudo-realistic scenes with little effort. For example, if you know the basic layout of a forest, it shouldn't be hard to generate a larger version of the same scene because the structure of such scenes is semi-regular. Other examples of such semi-regular scenes are cityscapes and partly cloudy skies. While such scenes could also be simulated, a simulation would be specific to the scene studied and would probably also be more time-intensive. We propose a quicker, more general method that depends only on a sample of such a scene.

In order to do this, we use a method that is similar to texture synthesis but has some important differences. In texture synthesis, we seek to extend the texture while keeping a similar pattern. With scene generation, we seek a pattern of locations. Thus, we can think of scene generation as texture synthesis on a sparse set of irregularly placed points. These differences pose some difficulties, including the fact that our metric must try to match not only the locations of the points but also the number of points.

We use a Hausdorff metric to check how well a sampled piece of the scene fits into the larger part of the scene. The directed distance H(A,B) measures the difference between two sets of points by taking, for each point in A, the distance to its closest point in B, and then taking the maximum of those distances. Because H(A,B) alone ignores points in B that have no counterpart in A, we use the metric in both directions, i.e., we compute H(A,B) and H(B,A), to ensure that the number of points is considered. With this metric, it is fairly straightforward to start generating scenes.
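The two-directional distance described above can be sketched in a few lines of Python. This is a minimal brute-force illustration, not the project's code: the function names are hypothetical, and combining H(A,B) and H(B,A) by taking their maximum is an assumption (it is the usual symmetric Hausdorff distance; the text only says both directions are computed).

```python
import math

def directed_hausdorff(a, b):
    """Largest distance from any point in `a` to its nearest point in `b`."""
    if not a:
        return 0.0          # nothing in `a` needs to be matched
    if not b:
        return math.inf     # points in `a` have nothing to match against
    return max(min(math.dist(p, q) for q in b) for p in a)

def hausdorff(a, b):
    """Symmetric distance; taking the max of both directions is an assumption."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))

# Two trees versus one tree: the extra tree is only noticed in one direction.
two_trees = [(0.0, 0.0), (1.0, 0.0)]
one_tree = [(0.0, 0.0)]
forward = directed_hausdorff(one_tree, two_trees)   # 0.0
backward = directed_hausdorff(two_trees, one_tree)  # 1.0
both = hausdorff(one_tree, two_trees)               # 1.0
```

The example shows why one direction is not enough: the single tree is matched perfectly by the pair, so only the reverse direction reveals that a point is missing.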

Scenes with only one type of object

To begin, we considered a scene that is composed of only one type of object. As an example, consider a forest of all pine trees. Recall that we begin with some sample of what the forest looks like and desire to extend the sample. To do this, we pass a window over the scene that both overlaps the current scene and extends past it. In this window, we know the bottom half and desire to find a suitable upper half. We can do this by dragging a similarly sized window over the sample space and choosing the location that minimizes the Hausdorff distance between the two bottom halves. Then we fill in the rest of the window we wish to complete with the upper half of the found match.
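The window search described above might look roughly like the following sketch. It assumes the sample is a list of 2-D points in a `sample_w` by `sample_h` rectangle, that window coordinates are measured from the window's lower-left corner, and that candidate windows are tried on a regular grid with spacing `step`; all names and parameters are hypothetical, and the exhaustive scan is only for illustration.

```python
import math

def hausdorff(a, b):
    """Symmetric Hausdorff distance between two point lists (brute force)."""
    def directed(x, y):
        if not x:
            return 0.0
        if not y:
            return math.inf
        return max(min(math.dist(p, q) for q in y) for p in x)
    return max(directed(a, b), directed(b, a))

def crop(points, x0, y0, w, h):
    """Points inside the box, shifted so the box's lower-left corner is (0, 0)."""
    return [(x - x0, y - y0) for x, y in points
            if x0 <= x < x0 + w and y0 <= y < y0 + h]

def best_upper_half(sample, sample_w, sample_h, known_lower, win_w, win_h, step=1.0):
    """Slide a window over the sample; return (score, upper-half points) of the best match."""
    half = win_h / 2
    best_score, best_fill = math.inf, []
    y0 = 0.0
    while y0 + win_h <= sample_h:
        x0 = 0.0
        while x0 + win_w <= sample_w:
            window = crop(sample, x0, y0, win_w, win_h)
            lower = [p for p in window if p[1] < half]
            score = hausdorff(known_lower, lower)
            if score < best_score:
                best_score = score
                best_fill = [p for p in window if p[1] >= half]
            x0 += step
        y0 += step
    return best_score, best_fill

# Toy sample: the known lower half holds one tree at (1, 1) in window coordinates.
sample = [(1.0, 1.0), (1.0, 3.0), (5.0, 1.0), (5.0, 3.5)]
result = best_upper_half(sample, 8.0, 4.0, [(1.0, 1.0)], win_w=2.0, win_h=4.0)
```

Ties are broken in favor of the first window found, so a real implementation would probably want to pick randomly among near-equal matches to avoid repetition.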

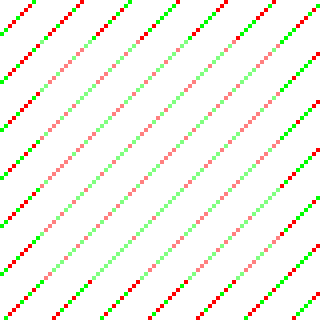

We can represent this scene as a map of locations, and so all we need is a two-dimensional picture to show how the method works. The following images show the sample scene along with the extended scene.

| Sample scene | Generated scene |

Scenes with more than one type of object

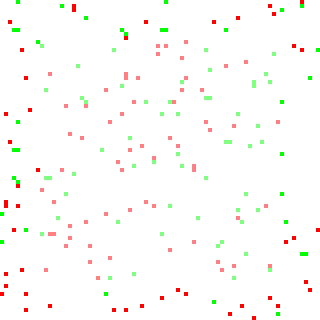

In reality, we wish to extend scenes that contain a variety of objects. In order to do this, we add a dimension to our points. We can compute the Hausdorff distance for points of any number of dimensions, so this doesn't pose any problems computationally. The third coordinate then represents the type of object at the given location. We also introduce a group factor that determines how much emphasis we would like to place on the type of object relative to its location. Sometimes we wish to keep the structure, but more often we wish to make sure that the densities of objects are respected.
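One way to picture the extra coordinate is the sketch below. It assumes each object is stored as `(x, y, kind)` with an integer type index `kind`, and that the group factor simply multiplies that index before the distance is computed; the text does not say exactly how the factor is applied, so this scaling, like the function names, is an assumption.

```python
import math

def hausdorff(a, b):
    """Symmetric Hausdorff distance; works for points of any dimension."""
    def directed(x, y):
        if not x:
            return 0.0
        if not y:
            return math.inf
        return max(min(math.dist(p, q) for q in y) for p in x)
    return max(directed(a, b), directed(b, a))

def embed(objects, group_factor):
    """Turn (x, y, kind) into a 3-D point whose third coordinate is scaled (assumed)."""
    return [(x, y, group_factor * kind) for x, y, kind in objects]

# Same locations, but the second object differs in type.
pines = [(0.0, 0.0, 0), (2.0, 0.0, 0)]
mixed = [(0.0, 0.0, 0), (2.0, 0.0, 1)]

low = hausdorff(embed(pines, 1), embed(mixed, 1))    # type mismatch costs 1.0
high = hausdorff(embed(pines, 5), embed(mixed, 5))   # type mismatch costs 5.0
```

With a larger group factor, a wrong object type is penalized more heavily than a small shift in position. Note that treating the type index as a coordinate also makes type 0 "closer" to type 1 than to type 2, which may or may not be desirable.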

To generate this type of scene, we need to choose the group factor along with the number of different types of objects in the scene. Some examples appear below.

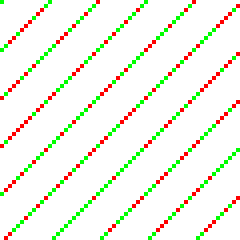

| Sample scene | Generated scene |

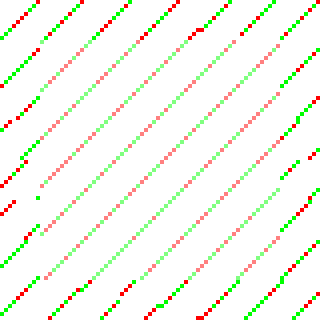

| Sample scene | Generated scene (group factor = 1) |

| Sample scene | Generated scene (group factor = 5) |

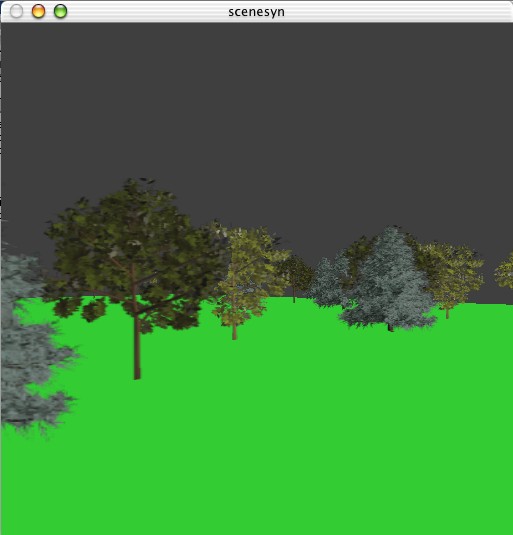

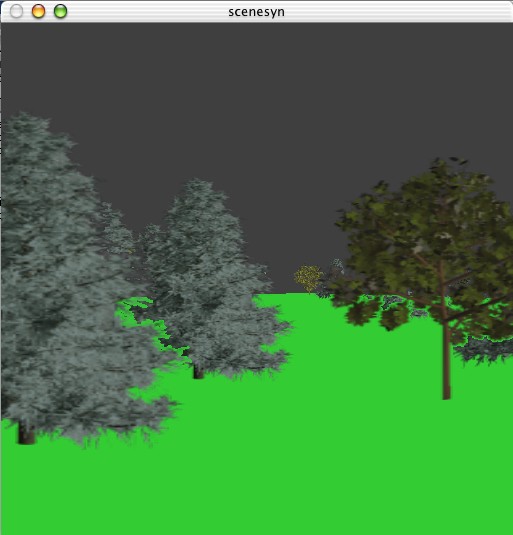

On-the-fly generation

This technique really isn't that interesting unless we can use it on the fly. We would like to allow a user to wander through a forest of trees forever, but we also don't want the scene to become too repetitive. We use the idea of scene generation above, but modify it so that the scene is only grown in the direction we care about.

Using OpenGL, we present a billboard model of a forest, and as we walk through it, we extend the scene in a given direction as needed. It should be noted that we only add windows from the given sample; we do not sample from the generated parts of the scene. Preliminary results appear below. This technique does not currently run in real time, but that is because we use a very slow algorithm to search for windows, so it should be relatively simple to convert it to an online algorithm.
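The "extend as needed" idea can be sketched independently of the rendering. The following is a simplified illustration under several assumptions: the viewer moves in the +y direction, the scene is grown one horizontal strip at a time, and `choose_fill` is a hypothetical callback that takes the most recent strip (in strip-relative coordinates) and returns points for the next strip, always drawn from the original sample rather than from generated parts of the scene.

```python
def extend_forward(scene, frontier_y, viewer_y, lookahead, strip_h, choose_fill):
    """Append strips until the scene reaches `lookahead` past the viewer.

    `scene` is a list of (x, y) points and is extended in place; the new
    frontier is returned. `choose_fill` is a hypothetical callback.
    """
    while frontier_y < viewer_y + lookahead:
        base = frontier_y - strip_h
        # The last strip, shifted so its lower edge sits at y = 0.
        known = [(x, y - base) for x, y in scene if base <= y < frontier_y]
        fill = choose_fill(known)
        scene.extend((x, y + frontier_y) for x, y in fill)
        frontier_y += strip_h
    return frontier_y

# Toy stand-in for the window search: repeat the previous strip unchanged.
def repeat_last(known):
    return list(known)

scene = [(1.0, 0.5)]
frontier = extend_forward(scene, frontier_y=1.0, viewer_y=0.0,
                          lookahead=3.0, strip_h=1.0, choose_fill=repeat_last)
```

In a real walkthrough this function would be called each frame with the current viewer position, and `choose_fill` would be replaced by a Hausdorff-based search over the sample.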

| Screenshot 1 |

| Screenshot 2 |

Finally, we would like to extend this technique so that, given both the locations and types of objects in a sample scene and a set of high-quality images from various viewpoints in the sample scene, we can generate an infinite high-quality scene from the given images using the scene generation algorithm and image-based rendering.